The Future of AI in OTT Streaming: How Artificial Intelligence is Transforming the Industry

The Over-the-Top (OTT) streaming industry is undergoing a massive transformation, and at the heart of this evolution is Artificial Intelligence (AI). From hyper-personalized recommendations to AI-assisted content creation and real-time multilingual translations, AI is reshaping how we consume, create, and distribute video content.

In this blog post, we explore the biggest AI-driven innovations in OTT and how they are shaping the future of streaming.

AI-Powered Personalization: The Next Level of Content Discovery

One of the most visible uses of AI in OTT streaming is personalized content recommendations. Gone are the days of static recommendations based solely on past watch history. AI-driven algorithms now leverage real-time data analysis to enhance content discovery and user engagement.

How AI is Enhancing Personalization

- Machine Learning-Based Recommendations: AI analyzes watch history, search behavior, in-app interactions, and even viewing duration to provide highly personalized recommendations. Platforms like Netflix, Disney+, and Amazon Prime Video use these algorithms to improve user experience and retention.

- Context-Aware AI: Future OTT platforms will use biometric sensors, weather data, and real-time mood analysis to suggest content based on a user’s emotional state, location, and time of day.

- AI-Powered Smart Trailers & Summaries: AI will automatically generate personalized trailers, focusing on elements (action, drama, humor) that align with an individual user’s interests.

What’s Next?

By 2025, AI-driven content personalization will evolve beyond just recommendations. AI will start modifying content in real-time, offering different versions of a movie or TV show depending on user preferences (e.g., faster pacing for action lovers, extended dialogue for drama enthusiasts).

Modern Mechanisms of Recommendation Engines

Collaborative, Content-Based, and Session-Based Models

Modern recommendation engines combine collaborative filtering (user-user, item-item), content-based models (deep metadata analysis), and session-based models (real-time behavior tracking). Together, these systems personalize recommendations even for new users or niche content categories.
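
To make the collaborative-filtering part concrete, here is a minimal item-item sketch. The watch data, title names, and function names are hypothetical, and production systems typically combine this kind of signal with content-based and session-based models rather than using it alone.

```python
import math

# Hypothetical implicit-feedback data: user -> set of titles watched.
WATCHED = {
    "ana": {"space_saga", "robot_wars", "deep_ocean"},
    "ben": {"space_saga", "robot_wars"},
    "cy": {"deep_ocean", "baking_duel"},
}

def item_similarity(a: str, b: str) -> float:
    """Cosine similarity between two titles over binary watch vectors."""
    users_a = {u for u, items in WATCHED.items() if a in items}
    users_b = {u for u, items in WATCHED.items() if b in items}
    if not users_a or not users_b:
        return 0.0
    return len(users_a & users_b) / math.sqrt(len(users_a) * len(users_b))

def recommend(user: str, k: int = 2) -> list[str]:
    """Score unseen titles by their summed similarity to the user's history."""
    seen = WATCHED[user]
    catalog = set().union(*WATCHED.values())
    scores = {
        cand: sum(item_similarity(cand, s) for s in seen)
        for cand in catalog - seen
    }
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [title for title, _ in ranked[:k]]

print(recommend("ben"))  # ['deep_ocean', 'baking_duel']
```

Here "ben" is offered "deep_ocean" first because it is co-watched with both titles in his history, while "baking_duel" shares no viewers with them.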

Diversity, Serendipity, and Decision-Fatigue Controls

AI platforms now apply re-ranking algorithms to avoid repetitive suggestions and reduce decision fatigue. By tracking “no-selection” exits and “time-to-first-play,” platforms ensure diverse and serendipitous content discovery, keeping the viewer engaged longer.
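
One common way to re-rank for diversity is a greedy maximal-marginal-relevance (MMR) pass. The sketch below is illustrative only: the titles, the genre-equality similarity proxy, and the `lambda_` weight are assumptions, not a description of any platform's actual re-ranker.

```python
def rerank_for_diversity(candidates, lambda_=0.7, k=3):
    """Greedy MMR: trade relevance against similarity to already-picked items.

    candidates: list of (title, relevance, genre) tuples.
    Similarity is a toy proxy: 1.0 if two titles share a genre, else 0.0.
    """
    remaining = list(candidates)
    picked = []
    while remaining and len(picked) < k:
        def mmr(item):
            _, relevance, genre = item
            redundancy = max(
                (1.0 if genre == g else 0.0 for _, _, g in picked),
                default=0.0,
            )
            return lambda_ * relevance - (1 - lambda_) * redundancy
        best = max(remaining, key=mmr)
        picked.append(best)
        remaining.remove(best)
    return [title for title, _, _ in picked]

CANDIDATES = [
    ("Heist A", 0.95, "thriller"),
    ("Heist B", 0.93, "thriller"),
    ("Cook-Off", 0.80, "reality"),
    ("Heist C", 0.90, "thriller"),
]
print(rerank_for_diversity(CANDIDATES))  # ['Heist A', 'Cook-Off', 'Heist B']
```

A pure relevance sort would show three thrillers in a row; the redundancy penalty lifts the reality title into second place.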

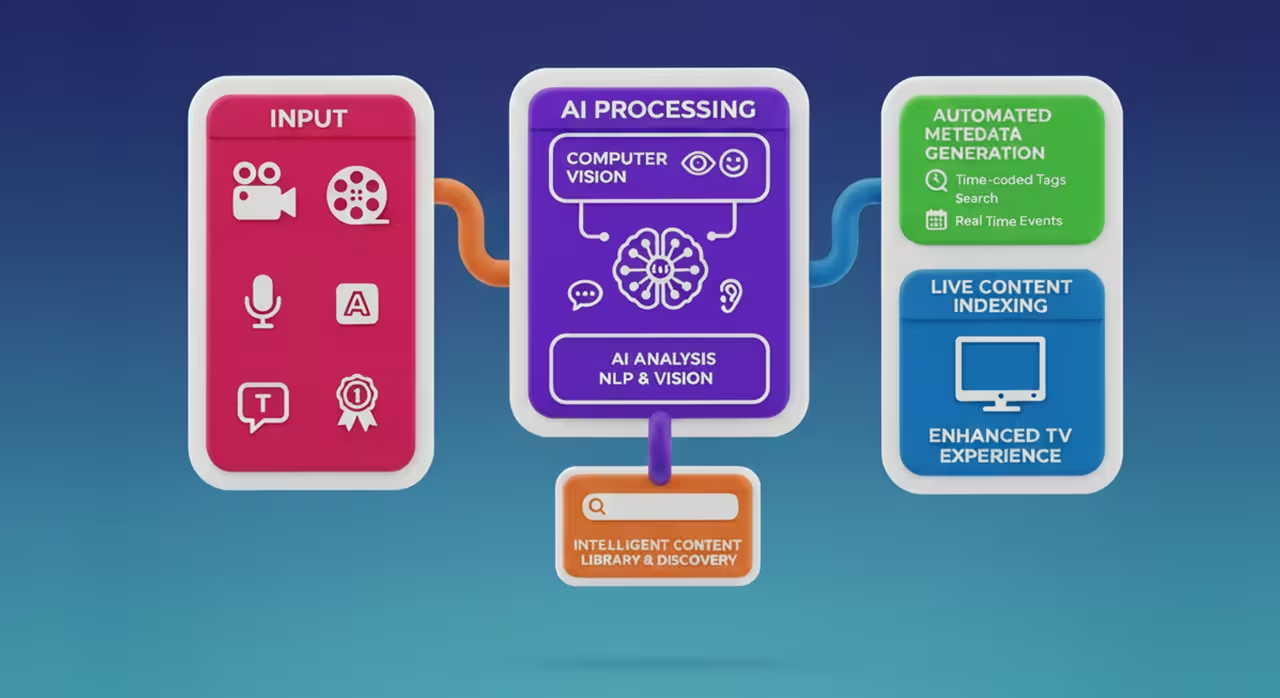

Frame-Level Content Indexing & Intelligent Metadata

Traditional tagging can’t keep up with today’s massive content libraries. AI solves this through frame-level content indexing and intelligent metadata generation using computer vision and NLP.

Automated Metadata via Computer Vision & NLP

AI detects faces, logos, objects, text (OCR), and emotions at the frame level. This allows platforms to create deep, time-coded metadata, making content searchable and enabling new discovery experiences like scene-based recommendations.
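
As a rough illustration of what time-coded metadata can look like, the sketch below stores detections as records with start and end times and queries them. The `Detection` fields, labels, and confidence threshold are hypothetical; the detections themselves would come from computer vision and OCR models not shown here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Detection:
    start_s: float     # segment start, in seconds from the start of the title
    end_s: float       # segment end
    kind: str          # e.g. "face", "logo", "object", "ocr", "emotion"
    label: str         # what the (hypothetical) detector reported
    confidence: float  # detector confidence in [0, 1]

def find_moments(index, kind, label, min_confidence=0.8):
    """Return sorted (start, end) ranges where a confident detection matches."""
    hits = [
        (d.start_s, d.end_s)
        for d in index
        if d.kind == kind and d.label == label and d.confidence >= min_confidence
    ]
    return sorted(hits)

INDEX = [
    Detection(12.0, 18.5, "object", "motorcycle", 0.93),
    Detection(40.0, 44.0, "ocr", "EXIT", 0.88),
    Detection(95.0, 101.0, "object", "motorcycle", 0.71),
    Detection(300.0, 312.0, "object", "motorcycle", 0.90),
]
print(find_moments(INDEX, "object", "motorcycle"))  # [(12.0, 18.5), (300.0, 312.0)]
```

The low-confidence detection at 95 seconds is filtered out, which is the kind of trade-off a scene-based search feature would need to tune.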

Live Content Indexing & Intelligent Archiving

For live streams and sports, AI identifies key moments, clusters highlights, and enriches archives automatically. This ensures long-tail discoverability, allowing platforms to resurface relevant clips months or years later.

Smart Video Search with Semantic Understanding

Semantic Search vs. Keyword Matching

Traditional keyword search often fails when users don’t know the exact title. Semantic search understands the meaning behind queries. It uses embeddings, context ranking, and multi-signal scoring to surface relevant results even with vague or incomplete queries.
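
The embedding idea can be sketched with cosine similarity over vectors. The three-dimensional vectors and titles below are hand-made stand-ins for the output of a real embedding model, which is assumed and not shown.

```python
import math

# Hand-made 3-d vectors standing in for a real embedding model's output.
# The dimensions are loosely (space, romance, cooking), purely for illustration.
TITLE_VECTORS = {
    "Orbit's Edge": (0.9, 0.1, 0.0),
    "Love in Lisbon": (0.0, 0.95, 0.1),
    "Knife Skills": (0.0, 0.1, 0.9),
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def semantic_search(query_vector, k=2):
    """Rank titles by similarity to the query vector and return the top k."""
    ranked = sorted(
        TITLE_VECTORS,
        key=lambda title: cosine(query_vector, TITLE_VECTORS[title]),
        reverse=True,
    )
    return ranked[:k]

# Assumed embedding of a vague query like "that film with the stranded astronauts".
print(semantic_search((0.8, 0.2, 0.0)))  # ["Orbit's Edge", 'Love in Lisbon']
```

The query shares no keyword with any title, yet the closest vector still surfaces the space film first.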

In-Video Search & AI-Generated Chapters

AI streaming services now let users jump to exact moments within a video. AI auto-generates chapters, scene descriptions, and preview thumbnails, improving user navigation and completion rates.

AI in Content Creation: From Scriptwriting to Video Editing

AI is not just recommending content; it’s creating it. The rise of text-to-video tools such as OpenAI’s Sora and the AI video products from DeepBrain AI suggests that AI can write, edit, and even produce video content with little human input.

How AI is Revolutionizing Content Production

- AI-Assisted Scriptwriting: AI tools like OpenAI’s GPT are helping scriptwriters develop storylines, generate dialogue, and suggest creative plot twists.

- Automated Video Editing: AI-powered editing tools can analyze raw footage and automatically select the best cuts, transitions, and effects, dramatically reducing post-production time.

- Deepfake & AI-Generated Actors: AI can now recreate actors’ faces, voices, and expressions, making it possible to produce content without the need for live actors. Companies like Metaphysic AI are already experimenting with deepfake technology for cinema and streaming.

What’s Next?

By 2025, AI-generated short films and even full-length AI-powered TV series will become a reality. AI-powered interactive narratives will let viewers customize storylines, creating a personalized cinematic experience.

Automated Content Moderation & Safety

Policy Violations

AI moderation tools detect nudity, violence, or hate speech using multi-modal analysis. Risk scoring models map policy violations across regions, ensuring compliance and user safety at scale.
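
A region-aware risk decision might look like the sketch below. The region names, thresholds, and category scores are all hypothetical; in practice the scores would come from multi-modal classifiers and the thresholds from each market's policy team.

```python
# Hypothetical per-region thresholds: a score at or above the threshold blocks the asset.
REGION_POLICY = {
    "region_a": {"nudity": 0.30, "violence": 0.70, "hate_speech": 0.20},
    "region_b": {"nudity": 0.60, "violence": 0.50, "hate_speech": 0.20},
}

def violations(scores, region):
    """List the policy categories whose classifier score meets the region's threshold."""
    policy = REGION_POLICY[region]
    return sorted(
        cat for cat, threshold in policy.items()
        if scores.get(cat, 0.0) >= threshold
    )

def decide(scores, region, review_margin=0.1):
    """Return 'block', 'review' (score close to a threshold), or 'allow'."""
    if violations(scores, region):
        return "block"
    policy = REGION_POLICY[region]
    near = any(
        scores.get(cat, 0.0) >= threshold - review_margin
        for cat, threshold in policy.items()
    )
    return "review" if near else "allow"

scores = {"nudity": 0.45, "violence": 0.42, "hate_speech": 0.02}
print(decide(scores, "region_a"))  # block
print(decide(scores, "region_b"))  # review
```

The same asset is blocked in one region and routed to human review in another, which is the cross-region mapping the paragraph above describes.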

Live-Stream Safeguards

Live-stream safeguards include AI delay buffers, escalation workflows, and audit trails for transparent decision-making. This minimizes harmful content exposure without stifling creative freedom.

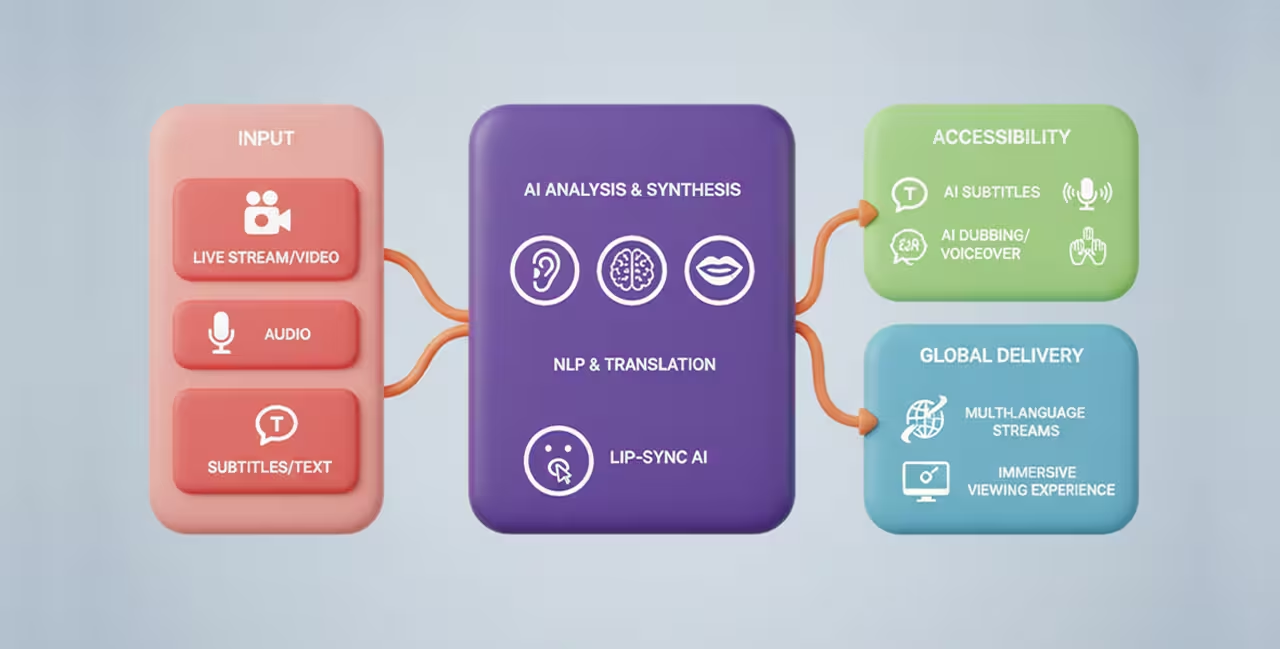

AI for Accessibility & Multilingual Content

AI is breaking language barriers in streaming, making global content more accessible than ever.

Key AI-Driven Advancements in Accessibility

- AI-Powered Subtitling & Dubbing: AI tools like DeepDub and Resemble AI can create real-time, high-quality translations and voiceovers for content in multiple languages.

- Lip-Sync AI for Dubbed Content: Traditional dubbing often suffers from mismatched lip movements. AI can automatically sync actors’ lips to new languages, making dubbed content feel more natural and immersive.

- AI-Generated Sign Language Interpreters: AI models are being trained to automatically translate spoken words into sign language avatars, making OTT platforms more accessible for deaf and hard-of-hearing viewers.

What’s Next?

By 2025, AI-powered localization will make it possible to instantly translate any movie or series into multiple languages, eliminating the time-consuming and expensive manual dubbing process.

Technical Components of AI Localization

Localization is more than just translation; it's about making content resonate with a local audience. In the age of AI, this process has been revolutionized by sophisticated technologies that ensure accuracy, cultural relevance, and an immersive user experience. Here, we delve into the core technical components that power modern AI localization: Automatic Speech Recognition (ASR), Neural Machine Translation (NMT), and Text-to-Speech (TTS).

Speech Recognition (Transcription & Diarization)

The journey of localizing audio or video content begins with Speech Recognition, a crucial first step that transforms spoken words into text. This involves two key processes:

- Transcription: Advanced ASR systems are trained with accent-specific lexicons and vast datasets to ensure highly accurate transcription. They go beyond simply converting audio to text by accurately capturing nuances like punctuation, capitalization, and even specific domain terminology. This precision is vital, as any errors at this stage can cascade through the entire localization workflow.

- Diarization: Equally important is diarization, the process of identifying and labeling different speakers in an audio recording. This means the ASR system can distinguish who said what, which is critical for creating a natural and understandable localized transcript. Accurate speaker labeling ensures that subsequent translation and voice synthesis maintain the original conversation flow and context.
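
The way transcription and diarization fit together can be sketched as a merge of two timelines. The tuple formats below are assumptions for illustration; real ASR and diarization tools each have their own output schemas.

```python
def label_speakers(words, turns):
    """Attach a speaker label to each transcribed word.

    words: list of (start_s, end_s, text) from an ASR step.
    turns: list of (start_s, end_s, speaker) from a diarization step.
    A word is assigned to the turn that contains its midpoint.
    """
    labeled = []
    for start, end, text in words:
        mid = (start + end) / 2
        speaker = next((s for t0, t1, s in turns if t0 <= mid < t1), "unknown")
        labeled.append((speaker, text))
    return labeled

def to_lines(labeled):
    """Group consecutive words from the same speaker into transcript lines."""
    lines = []
    for speaker, text in labeled:
        if lines and lines[-1][0] == speaker:
            lines[-1][1].append(text)
        else:
            lines.append((speaker, [text]))
    return [f"{speaker}: {' '.join(parts)}" for speaker, parts in lines]

WORDS = [(0.0, 0.4, "Where"), (0.4, 0.8, "were"), (0.8, 1.2, "you?"),
         (1.5, 1.9, "At"), (1.9, 2.4, "home.")]
TURNS = [(0.0, 1.3, "S1"), (1.3, 3.0, "S2")]
print(to_lines(label_speakers(WORDS, TURNS)))
# ['S1: Where were you?', 'S2: At home.']
```

Keeping speaker labels attached to the text at this stage is what lets the later translation and voice synthesis steps assign the right voice to each line.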

Neural Machine Translation (NMT)

Once the speech is accurately transcribed, the textual content moves into the realm of Neural Machine Translation (NMT). This is where the magic of transforming language, while preserving its essence, truly happens. Modern NMT models are far more sophisticated than their rule-based or statistical predecessors, offering:

- Cultural Nuance: Unlike literal translation, which can often sound awkward or even offensive, advanced NMT models are trained on massive multilingual datasets that enable them to understand and respect cultural nuances. They can adapt idioms, proverbs, and social conventions to ensure the translated content feels natural and appropriate for the target audience.

- Brand Terminology: Maintaining consistent brand voice and terminology across different languages is paramount for global businesses. NMT systems can be customized with specific glossaries and style guides, ensuring that brand-specific terms, product names, and marketing messages are translated accurately and consistently, reinforcing brand identity in every market.

- Regional Language Context: Languages often have regional variations and dialects. Sophisticated NMT models can be fine-tuned to specific regional contexts, ensuring that the translation speaks directly to the local audience, avoiding generic or out-of-place language. This level of localization enhances engagement and makes the content feel truly bespoke.
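
Glossary customization varies by NMT provider, so the sketch below shows only a provider-independent quality check that could run after translation. The brand name "StreamMax", the glossary entries, and the function name are hypothetical.

```python
# Hypothetical glossary: source term -> required target-language rendering.
GLOSSARY_EN_ES = {
    "StreamMax": "StreamMax",              # brand name: must stay untranslated
    "watchlist": "lista de seguimiento",   # agreed product term
}

def glossary_violations(source, translation, glossary):
    """Return source terms that occur in `source` but whose required
    rendering is missing from `translation` (case-insensitive)."""
    src, tgt = source.lower(), translation.lower()
    return [
        term for term, required in glossary.items()
        if term.lower() in src and required.lower() not in tgt
    ]

source = "Add it to your watchlist on StreamMax."
good = "Añádelo a tu lista de seguimiento en StreamMax."
bad = "Añádelo a tu lista en StreamMáximo."

print(glossary_violations(source, good, GLOSSARY_EN_ES))  # []
print(glossary_violations(source, bad, GLOSSARY_EN_ES))   # ['StreamMax', 'watchlist']
```

A check like this catches brand-term drift automatically, leaving editors free to focus on tone and cultural fit.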

Text-to-Speech (TTS)

The final stage in this AI localization pipeline is Voice Synthesis or Text-to-Speech (TTS), where the translated text is transformed back into natural-sounding speech. This is about recreating the original content's emotional tone and delivery, ensuring an immersive experience.

The power of modern TTS systems lies in their integration with Speech Synthesis Markup Language (SSML). SSML allows for precise control over aspects of the synthesized voice such as pitch, speaking rate, volume, pauses, and emphasis, enabling developers and localization experts to fine-tune the output.

Using SSML controls, TTS systems can deliver highly expressive and engaging voiceovers that maintain the content's quality and immersion, effectively completing the localization journey from one language and culture to another.
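
As a small illustration, the sketch below assembles an SSML document using the standard `<speak>`, `<prosody>`, and `<break>` elements. The helper names are hypothetical, and which attribute values a given TTS engine honors depends on that engine.

```python
from xml.sax.saxutils import escape

def ssml_line(text, rate="medium", pitch="medium", pause_after_ms=0):
    """Wrap one translated line in SSML prosody controls.

    rate and pitch use SSML's named values (e.g. "slow", "fast", "low", "high").
    """
    body = f'<prosody rate="{rate}" pitch="{pitch}">{escape(text)}</prosody>'
    if pause_after_ms:
        body += f'<break time="{pause_after_ms}ms"/>'
    return body

def ssml_document(lines):
    """Join already-built SSML fragments into a single <speak> document."""
    return "<speak>" + "".join(lines) + "</speak>"

doc = ssml_document([
    ssml_line("Wait & see.", rate="slow", pause_after_ms=400),
    ssml_line("Run!", rate="fast", pitch="high"),
])
print(doc)
```

Escaping the text keeps characters such as `&` from breaking the markup, and the pause between the two lines helps preserve the timing of the original delivery.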

Advanced AI Dubbing Mechanisms

In the world of content localization, achieving a truly immersive and authentic experience goes beyond simple translation. Advanced AI dubbing mechanisms, particularly viseme alignment for lip-sync and sophisticated voice cloning, are revolutionizing how audiences consume international content.

Viseme Alignment & Speaker Identity Preservation

Imagine watching a dubbed film where the characters' mouths perfectly match the spoken words. This seemingly magical feat is made possible by AI's ability to perform viseme alignment. Visemes are the distinct visual shapes formed by the mouth and face when producing specific sounds. AI analyzes these visual cues in the original footage and precisely matches them with the translated speech. The result is a natural, synchronized dubbing experience that significantly enhances viewer immersion and believability, minimizing the often-distracting disconnect of traditional dubbing.
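
A heavily simplified way to think about viseme matching is to map phonemes to mouth shapes and count how often the original and dubbed sequences agree. The phoneme-to-viseme table and scoring rule below are toy assumptions; real systems work on video frames with much richer, language-specific viseme sets.

```python
# Toy phoneme-to-viseme table; real systems use larger, language-specific sets.
VISEME_OF = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip_teeth", "v": "lip_teeth",
    "a": "open", "o": "round", "u": "round",
    "s": "narrow", "t": "narrow",
}

def to_visemes(phonemes):
    """Map each phoneme to a mouth shape, defaulting to 'neutral'."""
    return [VISEME_OF.get(p, "neutral") for p in phonemes]

def lip_sync_score(original_phonemes, dubbed_phonemes):
    """Fraction of positions where the mouth shapes agree (0.0 to 1.0).

    Length differences count as mismatches because the score is divided
    by the longer sequence.
    """
    a, b = to_visemes(original_phonemes), to_visemes(dubbed_phonemes)
    if not a or not b:
        return 0.0
    matches = sum(1 for x, y in zip(a, b) if x == y)
    return matches / max(len(a), len(b))

print(lip_sync_score(["m", "a", "p"], ["b", "a", "m"]))       # 1.0
print(lip_sync_score(["m", "a"], ["b", "o", "u", "t"]))       # 0.25
```

A dubbing pipeline could use a score like this to choose between candidate translations, preferring the wording whose mouth shapes best fit the footage.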

Consent, Rights, and Cultural Accuracy

While the potential of voice cloning is immense, allowing for the preservation of original speaker identity and vocal nuances, its implementation comes with crucial ethical considerations. Voice cloning requires explicit rights management and rigorous editorial review. This is not merely a legal formality; it's about respecting individual identity, the cultural context of the original content, and ensuring full legal compliance. Responsible AI dubbing prioritizes obtaining clear consent from individuals whose voices are cloned and meticulously reviewing the output to prevent misuse or misrepresentation. The goal is to leverage this powerful technology to enhance the dubbing experience while upholding ethical standards and cultural sensitivity.

AI in Monetization: Smarter Ad Targeting & Revenue Optimization

OTT platforms are increasingly using AI-powered advertising and monetization strategies to boost revenue and optimize ad performance.

How AI is Enhancing OTT Monetization

- Dynamic Ad Insertion (DAI): AI personalizes ad experiences by dynamically inserting targeted ads based on viewer demographics, location, and past behavior.

- AI-Driven Interactive Ads: Streaming services are leveraging AI-powered interactive ads that allow users to engage with the content, turning passive ad consumption into an immersive experience.

- Shoppable Video Content: AI is integrating e-commerce with streaming, allowing viewers to click on products featured in a movie or show and purchase them instantly.
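
The decision step behind dynamic ad insertion can be sketched as a filter followed by a ranking. The `Ad` fields, the interest-overlap rule, and the bid tie-breaker are hypothetical simplifications; real ad decisioning also handles pacing, frequency caps, and consent.

```python
from dataclasses import dataclass

@dataclass
class Ad:
    ad_id: str
    regions: set      # regions where the campaign may run
    interests: set    # audience interests the campaign targets
    bid: float        # hypothetical price signal used as a tie-breaker

def pick_ad(ads, viewer_region, viewer_interests):
    """Choose the eligible ad with the most interest overlap, then the highest bid."""
    eligible = [ad for ad in ads if viewer_region in ad.regions]
    if not eligible:
        return None
    best = max(eligible, key=lambda ad: (len(ad.interests & viewer_interests), ad.bid))
    return best.ad_id

ADS = [
    Ad("sneakers", {"us", "ca"}, {"sports", "fashion"}, 2.0),
    Ad("cookware", {"us"}, {"cooking"}, 3.5),
    Ad("ski_pass", {"ca"}, {"sports", "travel"}, 4.0),
]
print(pick_ad(ADS, "us", {"sports", "travel"}))  # sneakers
print(pick_ad(ADS, "ca", {"sports", "travel"}))  # ski_pass
```

The same viewer interests produce a different ad in each region because eligibility is checked before relevance.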

What’s Next?

By 2025, AI-driven programmatic advertising will ensure that ads feel less intrusive and more relevant, increasing engagement and revenue for OTT platforms.

AI-Optimized Streaming

Predictive ABR, Multi-CDN Orchestration & Latency Minimization

Nothing is more frustrating than buffering or a slow startup. AI is revolutionizing how we deliver content by enhancing adaptive bitrate (ABR) streaming and enabling smarter Content Delivery Network (CDN) routing. This intelligent approach dynamically reduces buffering, slashes startup times, and, crucially, adapts to the unique conditions of regional Internet Service Providers (ISPs). By predicting network fluctuations and accounting for user device capabilities, AI helps viewers receive the best stream quality their connection can sustain.
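
The predictive part of ABR can be approximated with a very simple throughput forecast. The sketch below uses an exponentially weighted moving average as a stand-in for a learned predictor; the bitrate ladder, smoothing factor, and safety margin are assumptions.

```python
LADDER_KBPS = [400, 1200, 2500, 5000, 8000]  # hypothetical rendition bitrates

def predict_throughput(samples_kbps, alpha=0.5):
    """Exponentially weighted moving average of recent throughput samples.

    A learned model could replace this function without changing the caller.
    """
    estimate = samples_kbps[0]
    for sample in samples_kbps[1:]:
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate

def choose_rendition(samples_kbps, safety=0.8):
    """Pick the highest rendition that fits within a safety fraction of the forecast."""
    budget = safety * predict_throughput(samples_kbps)
    fitting = [b for b in LADDER_KBPS if b <= budget]
    return max(fitting) if fitting else LADDER_KBPS[0]

# Throughput is falling: 6000 -> 4000 -> 2000 kbps gives a 3500 kbps forecast.
print(choose_rendition([6000, 4000, 2000]))  # 2500
```

Because the forecast reacts to the downward trend, the player steps down to 2500 kbps before the buffer runs dry rather than after.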

AI for Quality of Service (QoS) & Predictive Troubleshooting

Beyond just delivery, AI is transforming how we maintain and improve the overall Quality of Service (QoS). With proactive anomaly detection, streaming platforms can now predict and fix issues before they ever impact users. Imagine a system that can identify a potential server overload or a degradation in a specific region's network performance and automatically take corrective action without the viewer ever knowing there was a potential problem.
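
A basic form of proactive anomaly detection is a rolling z-score over a QoS metric. The metric values, window size, and threshold below are illustrative assumptions; production systems usually add seasonality handling and per-region baselines.

```python
from statistics import mean, stdev

def find_anomalies(values, window=5, z_threshold=3.0):
    """Flag indexes whose value deviates strongly from the preceding window.

    Windows with zero variance are skipped, since a z-score is undefined there.
    """
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged

# Hypothetical rebuffering ratio (%) per minute for one region.
REBUFFER_PCT = [1.0, 1.2, 0.9, 1.1, 1.0, 1.1, 6.0, 1.0]
print(find_anomalies(REBUFFER_PCT))  # [6]
```

The spike at index 6 is flagged as soon as it arrives, which is the point at which an automated system could reroute traffic or alert an operator.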

AI in Video Encoding, Delivery & Anti-Piracy

Content-Aware Encoding & Bitrate Reduction

One of the most significant applications of AI in video streaming is content-aware encoding. Traditional pipelines often encode at a fixed target bitrate, regardless of the complexity of the scene. This leads to over-encoding of static scenes (wasting bandwidth) and under-encoding of dynamic scenes (resulting in lower quality).

AI-powered encoding, however, intelligently analyzes the content of each frame. It can differentiate between highly dynamic, action-packed sequences and static scenes with minimal movement. By understanding the visual complexity, AI optimizes bit allocation, dedicating more bits to moving scenes to maintain pristine quality and fewer bits to static frames where visual changes are minimal. This dynamic allocation maintains a consistent high quality for viewers while significantly cutting bandwidth costs for content providers. The result is a more efficient streaming process, reducing operational expenses without compromising the viewer's experience.
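
The bit-allocation idea can be sketched as a proportional split of a fixed budget. The complexity scores are assumed to come from a scene analyzer that is not shown, and the per-scene floor is a hypothetical safeguard so static scenes never drop to zero.

```python
def allocate_bits(complexities, total_kbits, floor_kbits=200):
    """Split a bit budget across scenes in proportion to their complexity.

    complexities: per-scene scores in (0, 1], e.g. from a motion/texture analyzer.
    Every scene first receives `floor_kbits`; the remainder is shared proportionally.
    """
    spare = total_kbits - floor_kbits * len(complexities)
    if spare < 0:
        raise ValueError("budget too small for the per-scene floor")
    weight = sum(complexities)
    return [floor_kbits + spare * c / weight for c in complexities]

# A static dialogue scene (0.1) and a chase scene (0.9) sharing 2400 kbits.
print([round(kbits) for kbits in allocate_bits([0.1, 0.9], 2400)])  # [400, 2000]
```

An equal split would give each scene 1200 kbits; the proportional split moves 800 of them from the static scene to the chase, where they are more visible.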

Watermarking, Fraud Detection & Piracy Prevention

Piracy remains a significant threat to the content industry, leading to substantial revenue losses. AI offers powerful tools to combat this challenge, providing robust solutions for content protection and fraud detection.

AI-driven systems can analyze vast amounts of data to identify sophisticated restreaming patterns, which are often indicative of illegal distribution. By detecting anomalies in viewing habits and content access, AI can pinpoint compromised accounts and unauthorized re-broadcasts. Furthermore, AI enables the implementation of dynamic watermarking. Unlike static watermarks, dynamic watermarks can be subtly embedded into the video stream in a way that is unique to each user or session. If content is illegally redistributed, the dynamic watermark can be traced back to its origin, identifying the source of the leak. This not only acts as a powerful deterrent but also provides crucial evidence for legal action, significantly enhancing the security of content distribution and protecting intellectual property.
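
The tracing side of dynamic watermarking can be sketched with a keyed hash: each session gets a short payload that only the platform can derive. The key, session IDs, and payload length below are hypothetical, and the step that actually embeds the bits invisibly into video frames is outside the scope of this sketch.

```python
import hashlib
import hmac

# Hypothetical demo key; a real key would live in a secrets manager.
SECRET_KEY = b"demo-key-not-for-production"

def watermark_payload(session_id: str, bits: int = 32) -> str:
    """Derive a short, session-specific bit string to embed in the video (bits <= 64)."""
    digest = hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).digest()
    value = int.from_bytes(digest[:8], "big") >> (64 - bits)
    return format(value, f"0{bits}b")

def trace_leak(extracted_payload: str, known_sessions):
    """Find which known session produced the payload recovered from a leaked copy."""
    for session_id in known_sessions:
        candidate = watermark_payload(session_id, len(extracted_payload))
        if hmac.compare_digest(candidate, extracted_payload):
            return session_id
    return None

SESSIONS = ["user42:sess1", "user77:sess9"]
leaked = watermark_payload("user77:sess9")   # pretend this was extracted from a pirate stream
print(trace_leak(leaked, SESSIONS))          # user77:sess9
```

Because the payload is derived with a secret key, a pirate cannot forge another user's mark, and the platform does not need to store a payload per session to trace a leak.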

What Does the Future Hold for AI in OTT?

Looking beyond 2025, AI will completely redefine the OTT experience.

Upcoming AI Innovations in Streaming

- AI-Powered Virtual Hosts & Influencers – AI-generated characters could host sports broadcasts, news segments, or interactive shows in real-time.

- Voice-Controlled Smart Streaming Assistants – Users will be able to ask AI to summarize a movie, recommend a scene, or even create a custom movie based on personal preferences.

- AI-Generated Sports Commentary & Real-Time Highlights – AI will analyze live sports footage and automatically generate dynamic commentary and highlight reels.

- AI in Interactive & Immersive Storytelling – Viewers will have the power to influence narratives in real-time, making AI-driven storytelling a revolutionary entertainment format.

Final Thought: Are We Ready for an AI-Powered Streaming Future?

AI is reshaping the entire industry. From hyper-personalization and AI-generated content to real-time translation and AI-powered monetization, the OTT industry is on the brink of a massive transformation.

OTT operators and content creators must start preparing now to leverage AI tools, stay competitive, and embrace the future of intelligent streaming.